The use of AI is becoming embedded in the day-to-day work of many Belgian and Dutch lawyers. But how do the Bar associations view this? Both the Flemish Bar Association (OVB) and the Dutch Bar Association (NOvA) have recently published clear guidelines on the use of AI in the legal profession. They confirm that AI can be a valuable tool for lawyers – but they also set strict conditions.

This blog gives you a practical overview of those AI guidelines in Belgium and the Netherlands - and shows how an AI workspace like Alice fits seamlessly within them.

The core message: AI is allowed, but the lawyer stays in control

The Flemish and Dutch guidelines start from the same basic position:

- Using AI is neither prohibited nor mandatory.

- It falls within the lawyer’s own freedom and responsibility.

AI is explicitly recognised as a supporting tool, as long as it is used in line with the core values of the profession: independence, partisanship, competence, integrity and confidentiality.

Whether you work at a litigation firm in Brussels or Amsterdam, the message is identical: the lawyer remains ultimately responsible for every opinion, every pleading and every procedural document. AI may support the work, but it can never take decisions in the lawyer’s place.

AI guidelines in Belgium: how the OVB looks at AI in the legal profession

Duty of competence and understanding AI

The OVB explicitly states that a lawyer must understand the basics of artificial intelligence and Large Language Models (LLMs). AI systems recognise patterns in large datasets; LLMs generate text based on probabilities. Anyone using them should know what that means in practice.

At the same time, the OVB underlines that all traditional professional rules remain fully applicable whenever AI is used, in particular Article 455 of the Belgian Judicial Code and the provisions of the Codex Deontologie on competent representation, professional secrecy, independence, partisanship and conflicts of interest.

A very practical consequence: the lawyer must read and understand the terms of use of any AI tool, including how data are used for training, transferred and stored, where the processing takes place, whether the system is open or closed, and what the rules are on liability and intellectual property.

Data protection and professional secrecy

On data protection, the OVB sets a very high bar for publicly available AI tools:

- The lawyer should pseudonymise personal data as much as possible.

- As a rule, the lawyer should not enter personal data into prompts, input fields or documents uploaded to an AI tool.

- If processing personal data is truly essential (for instance, recording a meeting to generate minutes), the lawyer must be transparent and have a proper legal basis (such as explicit consent).

- Professional secrecy remains absolute: information or documents covered by professional secrecy or confidentiality should not be fed into AI tools - except in the exceptional case where the lawyer is absolutely certain that the tool runs in a fully closed environment with adequate safeguards (e.g. within the firm’s own perimeter, without any external data sharing).

Robots, chatbots and liability

The OVB does leave room for chatbots and automation within Belgian law firms, but imposes clear conditions:

- Users must understand that they are interacting with an automated AI system.

- The lawyer remains fully responsible for the AI output they use. All traditional liability principles continue to apply.

AI guidelines in the Netherlands: NOvA recommendations for responsible AI use

First understand, then apply

The NOvA stresses that basic knowledge of (generative) AI is essential for every lawyer. It encourages lawyers to follow training on LLM fundamentals, prompt engineering, hallucinations, bias mitigation, error detection, AI regulation and cybersecurity.

Just like in Belgium, the lawyer must:

- gain practical experience with AI tools;

- always verify AI output;

- preferably work with tools that show clear source references, so that case law, legislation and facts can be checked easily.

Confidentiality and data flows

The NOvA is extremely clear about confidentiality when publicly available AI tools are used:

- Do not enter confidential information into free, public AI tools.

- Only share information that is strictly necessary and document your reasons for using AI.

The NOvA also calls for:

- Data Protection Impact Assessments (DPIAs) whenever personal data are processed via AI;

- transparency about where data are stored and processed (country, subcontractors, security measures);

- careful vendor selection based on contractual terms regarding data ownership, IP rights, liability, exit clauses and vendor lock-in.

Independence, integrity and partisanship

Under the headings independence, integrity and partisanship, the NOvA summarises it neatly: “AI supports, but does not steer.”

- The lawyer remains fully responsible for advice and legal representation; AI is only a tool.

- Firms should adopt a firm-wide AI policy, implement it in practice and communicate clearly to clients about how AI is used.

- Lawyers must remain alert to unfair or biased algorithms that could undermine proper, partisan representation of their clients’ interests.

What this means for your law firm in Belgium or the Netherlands

Taken together, the OVB and NOvA guidelines make one thing very clear: AI use in law firms in Belgium and the Netherlands can no longer be a side project for the one “tech-minded associate”.

Whether your firm is based in Brussels, Antwerp, Ghent, Amsterdam or Rotterdam, you will need:

- a clear internal AI policy (what AI can be used for, which tools are allowed, under what conditions);

- explicit, documented choices on data protection and professional secrecy;

- investment in training and capability-building around AI and LLMs;

- the ability to demonstrate that your use of AI is legally and ethically sound.

The guidelines are not meant to block AI - they’re designed to create a framework that allows Belgian and Dutch lawyers to use AI with more confidence, as long as it’s under their control and within the boundaries of the profession.

All roads lead to Alice: why Alice was built for this landscape

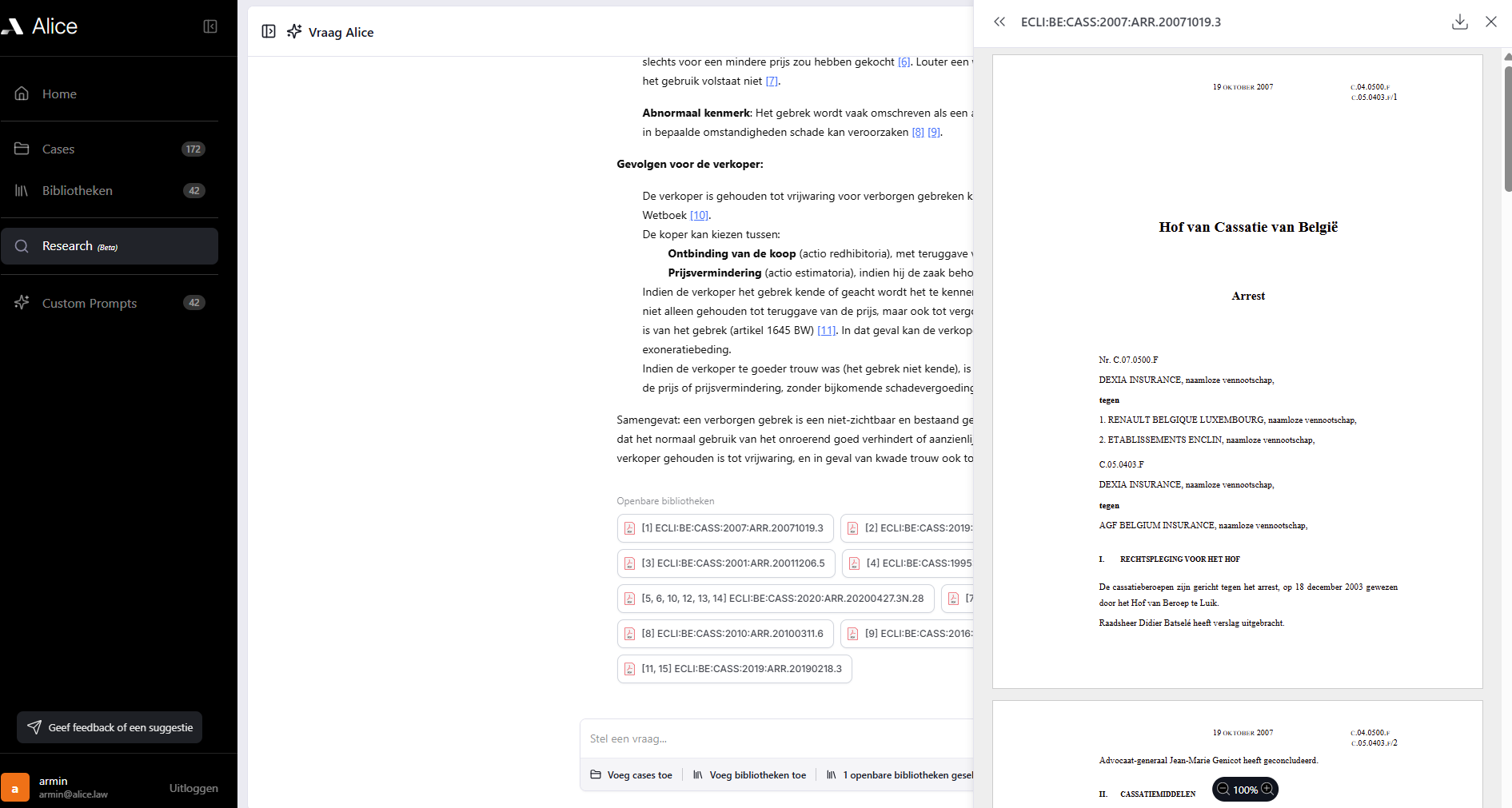

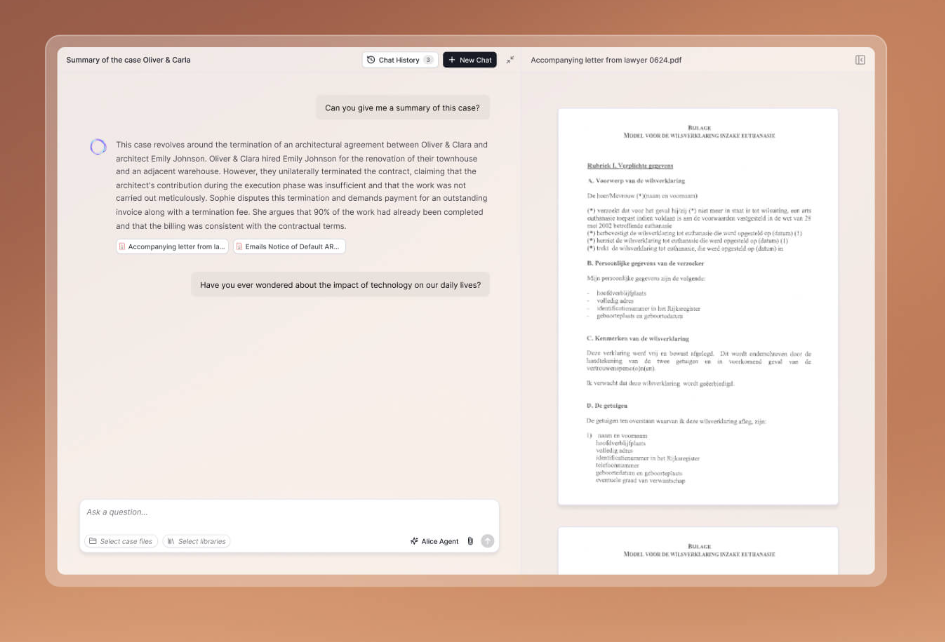

This is exactly where Alice comes in. Alice is not a generic chatbot, but an AI workspace built by lawyers, for lawyers, tailored to litigation and dispute resolution workflows in Belgium and the Netherlands.

The lawyer stays in the driver’s seat

In Alice, you always start from the case file: you upload agreements, evidence and correspondence, and let the AI work with those materials. You decide which documents are in scope, what analyses are made and which passages end up in a draft brief, advice or pleading.

Alice never produces answers in a vacuum. The lawyer remains the author and final decision-maker - exactly in line with the OVB and NOvA position that AI is a tool, not an autonomous actor.

Source transparency and verifiability by design

One of the NOvA’s concrete recommendations is to use tools that provide proper source references, so that AI output can be checked against the underlying material.

That is precisely how Alice works:

- every legal text or analysis created in Alice is traceable back to the underlying documents and sources;

- you see which passages from which documents were used, and you can click straight through to the original;

- that makes it easy to review, adjust and refine any AI-assisted reasoning before it ever reaches a court or client.

This fits perfectly with the OVB’s emphasis that lawyers must verify cited sources and remain critical of generated arguments.

Built with confidentiality as the starting point

Where the guidelines warn against public or free AI tools with unclear data flows, Alice is built on the opposite model:

- Alice runs as a secure firm environment, not as an open public chatbot.

- Firms control how they handle user access and integrations.

- The architecture is designed so that prompts and outputs stay within the firm context, aligning with both Belgian professional secrecy rules and Dutch expectations around data flows and DPIAs.

From ad hoc experiments to firm-wide policy

Both the OVB and NOvA stress the importance of a firm-wide AI policy.

Because Alice operates at the level of the entire firm, with shared workflows, configuration and quality controls, it helps you move from ad hoc, individual experimentation to a structured, policy-driven way of working with AI: not as a gimmick, but as an integrated, everyday part of your litigation workflows.

Summary

Whether you run a firm in Antwerp or in Amsterdam, the AI guidelines for lawyers in Belgium and the Netherlands now offer a clear framework: AI in the legal profession is allowed, provided you respect the core values, safeguard confidentiality and stay firmly in control.

That is exactly where Alice positions itself: as a legaltech platform that helps Belgian and Dutch law firms integrate AI into their workflow safely, transparently and in line with Bar guidelines - from case analysis and legal research through to argument building and document generation.

All roads lead to Alice.

Meet a new way of working with AI

By lawyers, for lawyers
